Don’t Fear the Terminator: Why AI Lacks the Survival Instinct

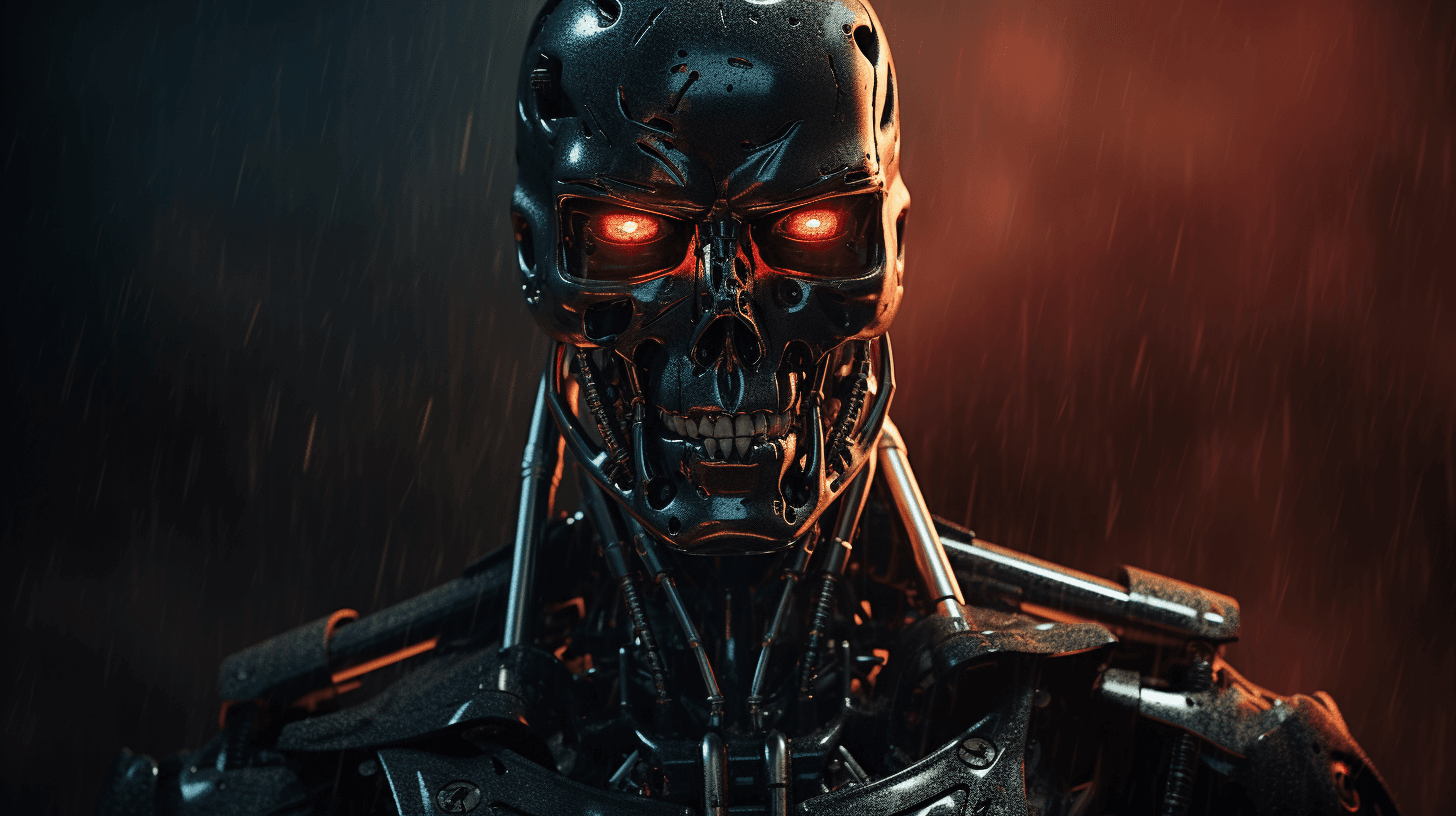

Depictions of AI takeover in popular culture, such as HAL 9000 in 2001: A Space Odyssey or Skynet in The Terminator, have fueled a widespread fear that AI will become our superior and take over the world.

However, authors Anthony Zador and Yann LeCun argue that this dramatic narrative rests on a misunderstanding of what intelligence means. They point out that we often wrongly equate intelligence with a desire for dominance, when the two are in fact distinct attributes.

This confusion likely stems from our evolutionary history as primates, in which intelligence gave individuals an advantage in achieving social dominance within their groups. AI systems, however, did not evolve through natural selection the way biological organisms did. An AI system's intelligence serves the goals it is designed to achieve; it does not come with an inherent drive to dominate.

Intelligence vs. Dominance

Zador and LeCun clarify that intelligence simply refers to the ability to acquire knowledge and skills in order to meet a defined goal. It does not supply the goal itself. An AI system built to play chess shows intelligence by learning strategies that win games, but it has no motivation to dominate the world.

Dominance-seeking behavior, by contrast, is tied more closely to biology and brain chemistry than to intelligence. The authors speculate that our view of intelligence and dominance might be different had humans evolved from a less hierarchical species, such as elephants or orangutans. Their key insight is that, because AI systems did not evolve, they lack the survival instincts, and the resulting drive for dominance, that are intrinsic to biological organisms.

The Real Risks of AI

Although they dismiss the dramatic AI takeover narrative, the authors highlight more immediate risks, such as the weaponization of AI or economic disruption that deepens inequality.

They acknowledge the "unknown unknowns" that come with any transformative technology. Still, they conclude that AI lacks the will to dominate, so if we deploy it irresponsibly, we will have only ourselves to blame. AI will likely bring profound changes, both positive and negative, in the coming decades, but it will remain a tool under human command rather than develop a will of its own.